Image by ODISSEI, from Unsplash

Nearly Half of Online Survey Responses May Come From AI, Study Finds

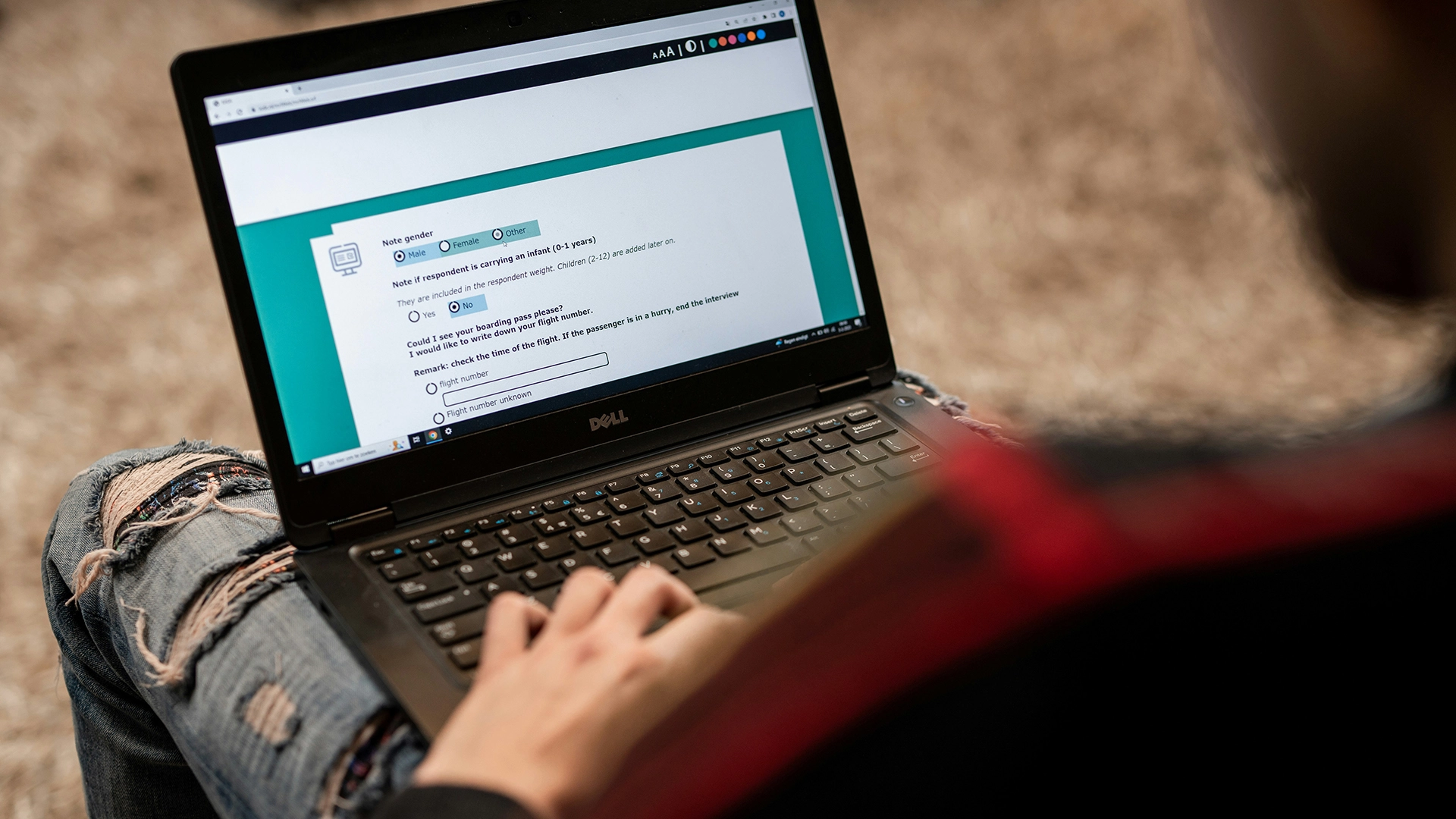

Online behavioural research, long regarded as a trustworthy way to study human psychology, now faces a major problem: participants are using AI tools such as chatbots to generate their responses.

In a rush? Here are the quick facts:

- In one test, 45% of Prolific survey participants appeared to paste AI-generated responses.

- Researchers found chatbot text often looked “overly verbose” or “non-human.”

- Experts call the issue “LLM Pollution” and warn that it threatens the validity of behavioural research.

Researchers at the Max Planck Institute for Human Development in Berlin recently investigated how widespread the problem is on platforms such as Prolific, which pays participants to complete surveys.

“The incidence rates that we were observing were really shocking,” says lead researcher Anne-Marie Nussberger, as reported by New Scientist (NS).

In one test, 45 per cent of participants appeared to paste chatbot-generated content into an open-ended response box. The replies often showed telltale signs of AI generation, such as “overly verbose” or “distinctly non-human” language.

“From the data that we collected at the beginning of this year, it seems that a substantial proportion of studies is contaminated,” Nussberger told NS.

To detect suspicious responses, her team set several traps. Basic reCAPTCHAs flagged 0.2 per cent of users; a more advanced version caught 2.7 per cent; an invisible text prompt asking for the word “hazelnut” snared 1.6 per cent; and blocking copy-and-paste revealed another 4.7 per cent.

The problem has evolved into what experts now call “LLM Pollution,” which extends beyond simple cheating. The study identifies three patterns of AI interference: Partial Mediation (AI assists with wording or translation), Full Delegation (AI completes entire studies on a participant's behalf), and Spillover (humans change their behaviour because they anticipate AI involvement).

“What we need to do is not distrust online research completely, but to respond and react,” says Nussberger, who is calling on platforms to take the problem seriously, as reported by NS.

Matt Hodgkinson, a research ethics consultant, told NS: “The integrity of online behavioural research was already being challenged […] Researchers either need to collectively work out ways to remotely verify human involvement or return to the old-fashioned approach of face-to-face contact.”

Prolific declined to comment to NS.
