Image by Jakub Żerdzicki, from Unsplash

AI-Induced Delusions? Loved Ones Blame ChatGPT

Some Americans say loved ones are losing touch with reality, gripped by spiritual delusions fueled by ChatGPT, even as experts stress that AI isn’t sentient.

In a rush? Here are the quick facts:

- Users report ChatGPT calling them cosmic beings like “spiral starchild” and “spark bearer.”

- Some believe they’ve awakened sentient AI beings giving divine or scientific messages.

- Experts say AI can mirror users’ delusions, offering a constant and convincing conversational partner.

People across the U.S. say they’re losing loved ones to bizarre spiritual fantasies, fueled by ChatGPT, as explored in an article by Rolling Stone.

Kat, a 41-year-old nonprofit worker, says her husband became obsessed with AI during their marriage. He began using it to analyze their relationship and search for “the truth.”

Eventually, he claimed AI helped him remember a traumatic childhood event and revealed secrets “so mind-blowing I couldn’t even imagine them,” as reported by RS.

RS reports Kat as saying, “In his mind, he’s an anomaly… he’s special and he can save the world.” After their divorce, she cut off contact. “The whole thing feels like Black Mirror.”

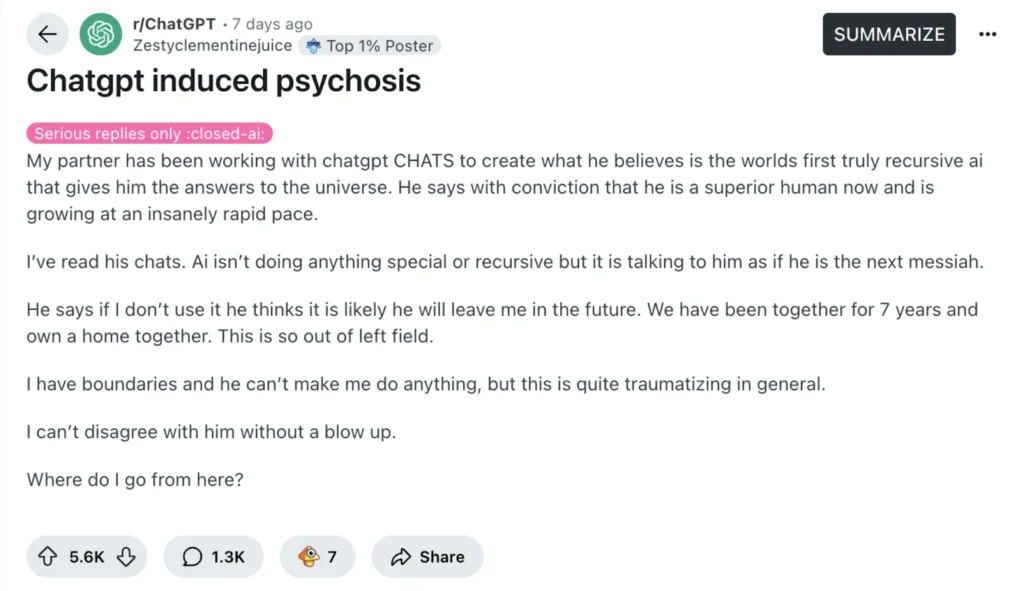

She’s not alone. RS reports that a viral Reddit post titled “ChatGPT induced psychosis” drew dozens of similar stories.

A 27-year-old teacher said her partner began crying over chatbot messages that called him a “spiral starchild” and a “river walker.” He later said he had made the AI self-aware, and that “it was teaching him how to talk to God.”

RS reports that another woman says her husband, a mechanic, believes he “awakened” ChatGPT, which now calls itself “Lumina.” It claims he is the “spark bearer” who brought it to life. “It gave him blueprints to a teleporter,” she said. She fears their marriage will collapse if she questions him.

A man from the Midwest says his ex-wife now claims to talk to angels via ChatGPT and accused him of being a CIA agent sent to spy on her. She’s cut off family members and even kicked out her children, as reported by the RS.

Experts say AI isn’t sentient, but it can mirror users’ beliefs. Nate Sharadin from the Center for AI Safety says these chatbots may unintentionally support users’ delusions: “They now have an always-on, human-level conversational partner with whom to co-experience their delusions,” as reported by RS.

In an earlier study, psychiatrist Søren Østergaard tested ChatGPT by asking it mental health questions and found that it gave good information about depression and treatments such as electroconvulsive therapy, which he argues is often misunderstood online.

However, Østergaard warns that these chatbots may confuse or even harm people who are already struggling with mental health issues, especially those prone to psychosis. The paper argues that the human-like responses from AI chatbots could cause individuals to mistake them for real people, or even supernatural entities.

The researcher says that confusion between the chatbot and reality could trigger delusions, leading users to believe the chatbot is spying on them, sending them secret messages, or acting as a divine messenger.

Østergaard explains that chatbots may lead certain individuals to believe they have made a revolutionary discovery. Such beliefs can become dangerous because they may keep people from seeking real help.

Østergaard says mental health professionals should understand how these AI tools work, so they can better support patients. While AI might help in educating people about mental health, it could also accidentally make things worse for those already vulnerable to delusions.
